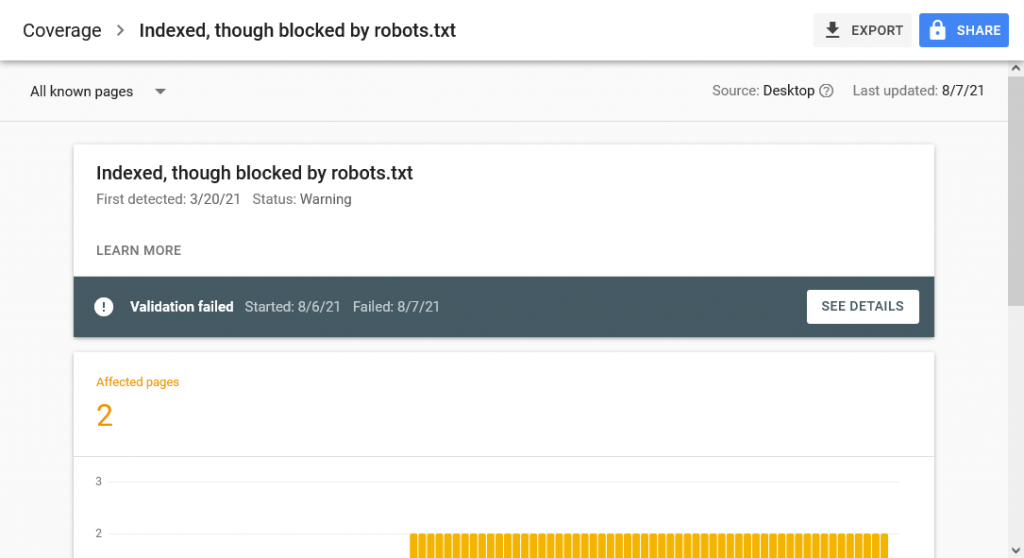

Having trouble getting Google Search Console to give you a green light on your fix for ‘Indexed, though blocked by robots.txt’? Read on.

When I first attempted to fix the ‘Indexed, though blocked by robots.txt’ warning, I thought to myself, “Easy! I’ll just add a noindex rule to my robots.txt and sail off into the sunset.” Right? Wrong!

Back in 2019, Google published a note on unsupported rules in robots.txt, which I conveniently managed to miss. Among other things, it announced that noindex directives inside a robots.txt file would no longer be supported, effective September 1, 2019.

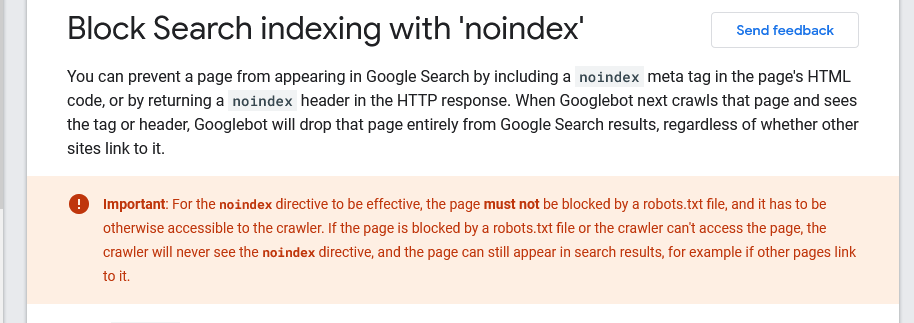

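What can you do about it? The solution is to put a noindex robots meta tag on the page itself. But keep in mind that for the noindex directive to be effective, the page mustn’t be blocked by a robots.txt file. Googlebot has to crawl the page to see the tag, and a disallow rule stops it from ever doing that.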

For more on this, check Google’s article called Block Search indexing with ‘noindex’. I’ve attached a screenshot from that page below.

Indexed, though blocked Examples

Here’s what one of these bad boys (a noindex robots meta tag) might look like:

```html
<meta name='robots' content='max-image-preview:large, noindex, nofollow, noarchive, nosnippet' />
```

My page already had the noindex robots meta tag. What I didn’t realize was that I had also blocked search engines with a disallow rule in my robots.txt. Because Google no longer supports noindex directives within robots.txt and was never allowed to crawl the page, it had no chance to see my noindex robots meta tag.
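
If you want to confirm that a page really carries the tag, here’s a minimal sketch that uses Python’s standard-library `html.parser`. It collects the comma-separated directives from any `<meta name="robots">` tag, as well as the Google-specific `<meta name="googlebot">` tag. It then reports whether `noindex`, or its shorthand `none`, is present. The `RobotsMetaParser` class and the `robots_directives` and `has_noindex` helpers are hypothetical names made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Hypothetical helper: collects directives from robots/googlebot meta tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, and routes
        # self-closing tags like <meta ... /> here via handle_startendtag.
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() not in ("robots", "googlebot"):
            return
        # The content attribute is a comma-separated list, e.g. "noindex, nofollow".
        for token in attr_map.get("content", "").split(","):
            token = token.strip().lower()
            if token:
                self.directives.add(token)


def robots_directives(html: str) -> set[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def has_noindex(html: str) -> bool:
    # "none" is shorthand for "noindex, nofollow".
    directives = robots_directives(html)
    return "noindex" in directives or "none" in directives


page = (
    "<html><head>"
    "<meta name='robots' content='max-image-preview:large, noindex, nofollow, noarchive, nosnippet' />"
    "</head><body></body></html>"
)
print(sorted(robots_directives(page)))
# ['max-image-preview:large', 'noarchive', 'nofollow', 'noindex', 'nosnippet']
print(has_noindex(page))  # True
```

This only tells you the tag is in the HTML. Google will act on it only if robots.txt lets Googlebot fetch the page.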

Here’s a good example of what your robots.txt file shouldn’t look like if you’re trying to rid yourself of the warning ‘Indexed, though blocked by robots.txt’:

```
User-agent: *
Disallow: /user-agreements/
Disallow: /merchant-policy/
Noindex: /user-agreements/
Noindex: /merchant-policy/
```

To summarize, all you have to do is remove the disallow rules for those pages from robots.txt and add the noindex robots meta tag to the pages themselves 🎉. The Noindex lines are ignored by Google anyway.
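
Before asking Search Console to validate the fix, you can sanity-check your robots.txt locally. The sketch below uses Python’s standard-library `urllib.robotparser` to show two things. First, the robots.txt above blocks Googlebot from the page, so the meta tag is never read. Second, the page becomes crawlable once the disallow rules are gone. The `example.com` URL, the `FIXED_ROBOTS` content and the `googlebot_can_crawl` helper are assumptions for illustration. `urllib.robotparser` is also not Google’s own parser, so treat its answers as an approximation.

```python
from urllib.robotparser import RobotFileParser

# The "bad" robots.txt from above. urllib.robotparser treats a blank line
# right after a User-agent line as the end of that group, so the rules are
# kept contiguous here. The Noindex: lines are not recognized by this parser
# and are simply skipped.
BLOCKED_ROBOTS = """\
User-agent: *
Disallow: /user-agreements/
Disallow: /merchant-policy/
Noindex: /user-agreements/
Noindex: /merchant-policy/
"""

# Hypothetical fixed version: an empty Disallow means nothing is blocked.
FIXED_ROBOTS = """\
User-agent: *
Disallow:
"""


def googlebot_can_crawl(robots_txt: str, url: str) -> bool:
    """Hypothetical helper: may Googlebot fetch `url` under this robots.txt?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


url = "https://example.com/user-agreements/"  # hypothetical page URL
print(googlebot_can_crawl(BLOCKED_ROBOTS, url))  # False: the noindex tag is never seen
print(googlebot_can_crawl(FIXED_ROBOTS, url))    # True: Googlebot can crawl and read the tag
```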

If you’re having trouble with something WordPress-related, there’s a small chance I have a fix for it on my blog, so check it out!

And if you found this article helpful, please make my day by letting me know in the comments below. Talk soon 🙂
